Der Physik Nobelpreis 2024 ging an John J. Hopfield und Geoffrey Hinton „für bahnbrechende Entdeckungen und Erfindungen, die maschinelles Lernen mit künstlichen neuronalen Netzen ermöglichen“.

Die Bilder und mathematischen Erläuterungen dieses Artikels sind dem Video A Brain-Inspired Algorithm For Memory entnommen, oder orientieren sich an der Presseerklärung des Nobelpreiskomitees.

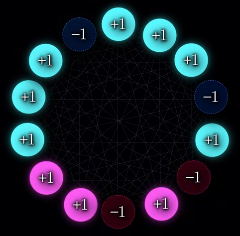

John Hopfield erfand 1982 ein Netzwerk, das eine Methode zum Speichern und Wiederherstellen von Mustern liefert. Wir können uns die Knoten des Netzwerks z.B. als Pixel eines Bildes vorstellen, wobei +1 einen schwarzen Pixel und -1 einen weißen Pixel repräsentiert.

Eine bestimmte Konfiguration in diesem Netzwerk wird durch eine Energie beschrieben, die der eines Systems winziger Magneten entspricht. In der Physik spricht man von Ising Spin Modellen. Das Hopfield Netz wird so trainiert, dass Werte für die Verbindungen zwischen den Knoten gefunden werden, die zu minimaler Energie führen. Es gilt:

$$ E= -\sum_{ij} w_{ij} x_i x_j $$

Für Verbindungen zwischen Knoten mit gleichem Vorzeichen muss \(\omega_{ij}\) also > 0 sein und für Verbindungen mit ungleichem Vorzeichen < 0 um die Energie zu minimieren.

Wenn das trainierte Hopfield-Netzwerk mit einem verzerrten oder unvollständigen Bild gefüttert wird, arbeitet es sich methodisch durch alle Knoten und aktualisiert die Werte, sodass die Energie des Netzwerks sinkt. Dazu berechnen wir zunächst für den zu verändernden Knoten \(i\) die gewichtete Summe h über alle Nachbarknoten. Ist \(h_i > 0\) , wird der Knoten auf +1 gesetzt und für \(h_i\ < 0\) auf -1.

$$ h_i = w_{i1}x_1 + \cdots + w_{iN}x_N = \sum_{j \neq i} w_{ij} x_j $$

Das Netzwerk arbeitet schrittweise, um das gespeicherte Bild zu finden, das dem unvollkommenen Bild, am ähnlichsten ist.

Doch wie sollen die Gewichtungen \(\omega\) gesetzt werden? Soll nur eine einzige Konfiguration \(\zeta\) gespeichert bzw. angelernt werden, gilt ein einfachster Ansatz \(\omega_{ij} = \zeta_i\zeta_j\) . Sollen mehrere sehr unterschiedliche Konfigurationen \(\zeta\) trainiert werden gilt \(\omega_{ij} = \zeta^1_{ij}+\zeta^2_{ij}+...\)- Positive Phase: Beginne mit einer Datenprobe und berechne die versteckten Aktivierungen.

- Negative Phase: Rekonstruiere die sichtbaren Einheiten aus den versteckten Aktivierungen und berechne dann erneut die versteckten Aktivierungen aus diesen Rekonstruktionen.

- Gewichtsaktualisierung: Passe die Gewichte basierend auf dem Unterschied zwischen der Datenverteilung und der rekonstruierten Verteilung an.

# ### Importing the libraries import numpy as np import pandas as pd import torch import torch.nn as nn import torch.nn.parallel import torch.optim as optim import torch.utils.data from torch.autograd import Variable # ### Importing the dataset and preparing the training set and the test set training_set = pd.read_csv('/kaggle/input/movielens-100k-dataset/ml-100k/u1.base', delimiter = '\t') training_set = np.array(training_set, dtype = 'int') # format of training_set is user id | movie id | rating | timestamp test_set = pd.read_csv('/kaggle/input/movielens-100k-dataset/ml-100k/u1.test', delimiter = '\t') test_set = np.array(test_set, dtype = 'int') # ### Getting the number of users and movies nb_users = int(max(max(training_set[:, 0], ), max(test_set[:, 0]))) # Calculate number of users nb_movies = int(max(max(training_set[:, 1], ), max(test_set[:, 1]))) # Calculate number of movies # ### Converting the data into an array with users in lines and movies in columns def convert(data): new_data = [] for id_users in range(1, nb_users + 1): id_movies = data[:, 1] [data[:, 0] == id_users] # Create boolean mask for all lines in 1st column matching user_id and transfer data of 2nd column to id_movies vector id_ratings = data[:, 2] [data[:, 0] == id_users] ratings = np.zeros(nb_movies) ratings[id_movies - 1] = id_ratings new_data.append(list(ratings)) return new_data # The reason why we make a list within a list is that torch wants the data in this structure training_set = convert(training_set) test_set = convert(test_set) # ### Converting the data into Torch tensors training_set = torch.FloatTensor(training_set) test_set = torch.FloatTensor(test_set) # Tensors are simply an multidimensional array containing elements of a single data type. They can be processed in gpu or tpu (Tensor Processing Unit). Tensorflow also has a tensor structure, but Pytorch is easier to implement. # ### Converting the ratings into binary ratings 1 (Liked) or 0 (Not Liked) training_set[training_set == 0] = -1 # not rated training_set[training_set == 1] = 0 # not liked training_set[training_set == 2] = 0 # not liked training_set[training_set >= 3] = 1 # liked test_set[test_set == 0] = -1 test_set[test_set == 1] = 0 test_set[test_set == 2] = 0 test_set[test_set >= 3] = 1

# ### Creating the architecture of the Neural Network class RBM(): def __init__(self, nv, nh): self.W = torch.randn(nh, nv) # Initializing the weight between nodes. Initial weight is according to the normal distribution in dimensions nh and nv. self.a = torch.randn(1, nh) # Initial bias value a for the visable node. 1 row and nh columns. Each element is a random number drawn from a standard normal distribution self.b = torch.randn(1, nv) # Initial bias value b for the hidden node def sample_h(self, x): # Sample_h will activate hidden nodes for certain probabilty using Boltzmann distribution wx = torch.mm(x, self.W.t()) # Multiplying the two tensors x and W transposed. activation = wx + self.a.expand_as(wx) # Adding bias a p_h_given_v = torch.sigmoid(activation) # Using sigmoid to calculate probability whether to activate the hidden node or not. return p_h_given_v, torch.bernoulli(p_h_given_v) # Values of the hidden nodes are retuned in 2nd vector. Bernoulli function is used to set the values according to probability p_h (given v). def sample_v(self, y): wy = torch.mm(y, self.W) # Multiplying the two tensors y and W. activation = wy + self.b.expand_as(wy) # Adding bias b p_v_given_h = torch.sigmoid(activation) # Activation return p_v_given_h, torch.bernoulli(p_v_given_h) def train(self, v0, vk, ph0, phk): # Next, contrastive divergence will be performed. # v0 is the input vector for visbile nodes, vk is the result after k samplings, ph0 is the probability vector of the hidden, phk is the probability vector after k samplings self.W += (torch.mm(v0.t(), ph0) - torch.mm(vk.t(), phk)).t() # Updating the weight self.b += torch.sum((v0 - vk), 0) # Updating b, 0 is only added to make the size 2 self.a += torch.sum((ph0 - phk), 0) # Updating a nv = len(training_set[0])# Number of visible nodes nh = 100 # Define number of hidden nodes batch_size = 100 # Define batch size for training rbm = RBM(nv, nh)

# ### Training the RBM nb_epoch = 10 # Define number of complete passes through entire training data set for epoch in range(1, nb_epoch + 1): train_loss = 0 s = 0. for id_user in range(0, nb_users - batch_size, batch_size): # Training in batches. This training can also be done individually vk = training_set[id_user : id_user + batch_size] v0 = training_set[id_user : id_user + batch_size] ph0,_ = rbm.sample_h(v0) # Calculate initial probabilty to set hidden nodes for k in range(10): # Gibss sampling (markov chain monte carlo) for contrastive divergence. _,hk = rbm.sample_h(vk) # set the hidden node according to Bernoulli distribution _,vk = rbm.sample_v(hk) # set the visible node vk[v0<0] = v0[v0<0] # transfer -1 (unrated) from v0 to vk phk,_ = rbm.sample_h(vk) # Probability to set hidden node after k training steps. rbm.train(v0, vk, ph0, phk) # Update W, a and b train_loss += torch.mean(torch.abs(v0[v0 >= 0] - vk[v0 >= 0])) # calculate 'training loss' by taking the difference between the initial value and the subsequent values for ratings == 1. s += 1. print('epoch: '+str(epoch)+' loss: '+str(train_loss/s)) # Print normalised loss value

# ### Testing the RBM with a different dataset (test data set) test_loss = 0 s = 0. for id_user in range(nb_users): v = training_set[id_user:id_user+1] vt = test_set[id_user:id_user+1] # Save training_set and test_set of user 'id_user' as 2D array in v and vt if len(vt[vt>=0]) > 0: _,h = rbm.sample_h(v) # flip hidden neurons according to trained probability based on W and a _,v = rbm.sample_v(h) # flip visible neurons based on W and b test_loss += torch.mean(torch.abs(vt[vt>=0] - v[vt>=0]))# calculate the mean value of changes for visible neurons rated with 3 in test dataset s += 1. print('test loss: '+str(test_loss/s)) # print out normlized average change when using test data set instead of training set

# # Proposing new movies for a specific user based on his/her presvious ratings user_ID=67 # select user_ID # load dataset containing list of: movie id | movie title | release date | video release date | IMDb URL | unknown | Action | Adventure | Animation | Children's | Comedy | Crime | Documentary | Drama | Fantasy |Film-Noir | Horror | Musical | Mystery | Romance | Sci-Fi |Thriller | War | Western movie_set = pd.read_csv('/kaggle/input/mymod-dataset/u3.item', delimiter = '|') movie_set = np.array(movie_set) v = training_set[user_ID:user_ID+1] # select vector of user in training set v0=v # store original vector in v0 if len(v[v>=0]) > 0: _,h = rbm.sample_h(v) # flip h _,v = rbm.sample_v(h) # flip v v[v0<0] = v0[v0<0] # keep non rated or non liked moves as non raeted or non liked #print rated movies pos = (v0[0]== 1).nonzero(as_tuple=True) #select positions in training set rated w/ 3 stars movies = [movie_set[i][1] for i in pos[0]] #look up in position in movie_set print ("rated movies:") for movie in movies: print(movie) dv=v-v0 # calculate changes in rating #print proposals for new movies pos = (dv[0]== 1).nonzero(as_tuple=True) #select positions in training set rated w/ 3 stars movies = [movie_set[i][1] for i in pos[0]] #look up in position in movie_set print ("\n"+"new proposed movies:") for movie in movies: print(movie)

Keine Kommentare:

Kommentar veröffentlichen